Principles

Image-Based Automation Basics

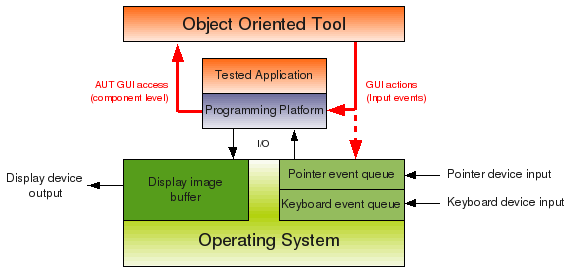

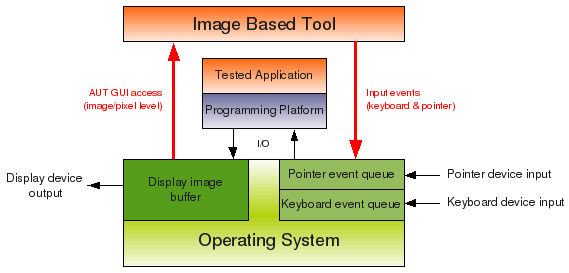

There are two approaches to black-box GUI automation: object-oriented and image-based.

For more information, see Black Box Automation.

Object-oriented automation tools are tightly integrated with a programming platform (for example .NET, C/C++ or Java) or with the graphical interface of the operating system (for example the Win32 API). They can typically retrieve the GUI component hierarchy of an application and expose the properties of individual components such as buttons, checkboxes, text fields and dropdowns. The advantage of this approach is that the script sees the GUI structure, so it can navigate through it and verify that it has the expected content. The disadvantages are that these tools cannot automate across different technologies and that they often depend on particular platform or application versions, forcing upgrades as new versions are released.

Image-based automation tools analyze images of the application or of the whole computer desktop. They usually obtain the images from the underlying operating system, for example from the desktop image buffer or by attaching to the graphics driver. The advantage of this approach is that the underlying technology is irrelevant: the tool can automate any application that is displayed on the desktop of the operating system. Such automation is also simple, close to the end-user experience and easy to learn even without programming experience. In testing projects it can also reveal layout problems such as overlapping or ill-fitting GUI components.

T-Plan Robot Enterprise is an image-based automation tool. In essence, Robot provides image comparison methods that search the desktop for GUI or text components. A script can verify the status of the tested application by comparing the screen against collected image templates, or apply actions to the components it finds to drive the workflow in RPA projects.

Within the industry, image-based automation is termed "Black Box" automation because it only applies user input and then verifies or acts on the graphical output of the application. It is not concerned with what happens between these two events: how the input is processed, which code branches are executed, what the object properties are, and so on. Working at that level is known as "White Box" automation.

Accordingly, T-Plan Robot connects to the SUA (system under automation) through the input (mouse/keyboard) event queue and the display image buffer. This is achieved primarily through VNC (Virtual Network Computing) for connections to secondary machines, or through the direct Java and OS APIs for the local operating system in a local desktop setup.

By comparing the displayed screen against a set of predefined image templates, we can determine whether the system is fit for purpose in UAT (User Acceptance Testing), or drive user actions for Robotic Process Automation (RPA). The key point is that T-Plan Robot does not "see" the code of your application, which the user never sees either. It sees what is delivered to the user on the screen.
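To make the idea of comparing the screen against an image template concrete, here is a minimal sketch of an exact-match template search in plain Java. It is an illustration only and not T-Plan Robot's actual comparison algorithm, which the text above does not describe; the class `TemplateSearch` and its methods are hypothetical names. Real tools generally tolerate small pixel differences, whereas this sketch requires every pixel to match.

```java
import java.awt.Point;
import java.awt.image.BufferedImage;

/** Hypothetical exact-match template search; not T-Plan Robot's own algorithm. */
final class TemplateSearch {

    /** Returns the top-left corner of the first exact match, or null if there is none. */
    static Point find(BufferedImage screen, BufferedImage template) {
        int maxX = screen.getWidth() - template.getWidth();
        int maxY = screen.getHeight() - template.getHeight();
        // Scan candidate positions row by row, left to right.
        for (int y = 0; y <= maxY; y++) {
            for (int x = 0; x <= maxX; x++) {
                if (matchesAt(screen, template, x, y)) {
                    return new Point(x, y);
                }
            }
        }
        return null;
    }

    /** True if every template pixel equals the screen pixel at the given offset. */
    private static boolean matchesAt(BufferedImage screen, BufferedImage template,
                                     int offsetX, int offsetY) {
        for (int ty = 0; ty < template.getHeight(); ty++) {
            for (int tx = 0; tx < template.getWidth(); tx++) {
                if (screen.getRGB(offsetX + tx, offsetY + ty) != template.getRGB(tx, ty)) {
                    return false;
                }
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // Simulated 40x30 desktop with a 4x3 red "button" painted at (10, 5).
        BufferedImage screen = new BufferedImage(40, 30, BufferedImage.TYPE_INT_RGB);
        for (int y = 5; y < 8; y++) {
            for (int x = 10; x < 14; x++) {
                screen.setRGB(x, y, 0xFF0000);
            }
        }
        // Template image of the button, as it would be collected beforehand.
        BufferedImage template = new BufferedImage(4, 3, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < 3; y++) {
            for (int x = 0; x < 4; x++) {
                template.setRGB(x, y, 0xFF0000);
            }
        }
        Point hit = find(screen, template);
        System.out.println(hit == null ? "not found" : "found at " + hit.x + "," + hit.y);
    }
}
```

A script would use the returned coordinates either as a verification result (the component is on screen) or as the target of a subsequent mouse click.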

Image-Based Automation with T-Plan Robot

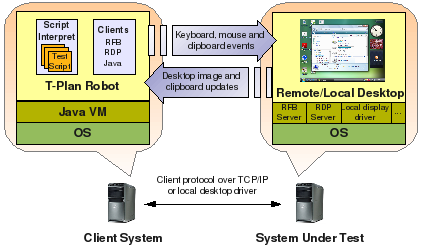

In the previous topic, we've established that T-Plan Robot is an image-based tool. Let's have a quick glance at its architecture.

The tool works with desktop images retrieved through remote desktop technologies or any other technology that produces images. It currently supports static images, the RFB protocol (better known as Virtual Network Computing, VNC), the Remote Desktop Protocol (RDP, employed by Windows Terminal Services) and local graphics drivers. Its architecture is open, however, and support for other protocols is under continuous development.

Remote desktop technologies typically operate in a client-server scenario, usually over TCP/IP. They allow a remote desktop (server) to be accessed through a viewer (client). The client sends local input events (mouse, stylus, keyboard, clipboard changes) to the server and receives desktop output such as desktop image updates, server clipboard changes or even sounds played on the server.

From this point of view, T-Plan Robot can be defined as a "scriptable remote desktop viewer". It drives the remote desktop either through user interaction (working as a normal viewer) or by following instructions from a script. To make automation possible it provides a scripting language (with Java support), image comparison methods, reporting capabilities and a GUI that makes scripts easy to write and debug.

In static image scenarios the tool may be defined as a "scriptable image viewer" that verifies and/or analyzes a static image using the same language and features that apply to live desktops.
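One way to picture how the same script logic can serve both live desktops and static images is to write the script against a small abstraction of "something that delivers an image and accepts input". The sketch below is an assumption made for illustration; the `Desktop` interface, `StaticImageDesktop` and `ScriptDemo` are hypothetical names and do not come from the T-Plan Robot API.

```java
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical abstraction: a source of desktop images that also accepts input. */
interface Desktop {
    BufferedImage image();
    void click(int x, int y);
}

/** Static-image "desktop": serves one fixed image and only records clicks. */
final class StaticImageDesktop implements Desktop {
    private final BufferedImage image;
    final List<String> log = new ArrayList<>();

    StaticImageDesktop(BufferedImage image) {
        this.image = image;
    }

    @Override
    public BufferedImage image() {
        return image;
    }

    @Override
    public void click(int x, int y) {
        // Nothing to drive behind a static image, so the event is just logged.
        log.add("click " + x + "," + y);
    }
}

final class ScriptDemo {

    /** A script step written only against the interface: click if the pixel has the expected color. */
    static boolean clickIfPixelIs(Desktop desktop, int x, int y, int expectedRgb) {
        int actualRgb = desktop.image().getRGB(x, y) & 0xFFFFFF; // drop the alpha byte
        if (actualRgb != expectedRgb) {
            return false;
        }
        desktop.click(x, y);
        return true;
    }

    public static void main(String[] args) {
        BufferedImage image = new BufferedImage(16, 16, BufferedImage.TYPE_INT_RGB);
        image.setRGB(4, 4, 0x00FF00);
        StaticImageDesktop desktop = new StaticImageDesktop(image);
        System.out.println("step passed: " + clickIfPixelIs(desktop, 4, 4, 0x00FF00));
        System.out.println("recorded events: " + desktop.log);
    }
}
```

A live connection (for example over VNC) would be another implementation of the same interface, returning the latest desktop image and forwarding the click to the server, while the script step itself would stay unchanged.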

TIP: A more detailed T-Plan Robot architecture chart is available at the Java API home page.

VNC Technology Overview

As already mentioned, in live desktop automation scenarios T-Plan Robot relies on the Remote Frame Buffer (RFB) protocol. The technology is better known by the name of the software that implements it: VNC, Virtual Network Computing.

VNC was originally developed in the late 1990s at the Olivetti & Oracle Research Laboratory (ORL) in Cambridge, UK, which AT&T acquired in 1999 and closed in 2002. The source code was released as open source under the GPL, and the technology spread through the IT industry. Today there are many variants of VNC software offering various free or commercial extra features. Most products, however, support at least the basic functionality specified by the RFB protocol. For an overview see the Wikipedia article on VNC.

VNC typically operates in a client-server scenario over TCP/IP. The viewer (client) displays an image of the server desktop and sends the server all mouse pointer moves, clicks and drags, as well as the keys typed on the client keyboard. In return, the server sends the updated pixels of the remote desktop image.
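As a small illustration of the client-server exchange, the RFB specification (RFC 6143) has the server open the conversation with a 12-byte ProtocolVersion message of the form `RFB xxx.yyy` followed by a newline. The sketch below parses such a greeting; the class `RfbVersion` is a hypothetical helper and not part of T-Plan Robot, and the server bytes are simulated in memory rather than read from a real socket.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper that parses the 12-byte RFB ProtocolVersion greeting. */
final class RfbVersion {
    final int major;
    final int minor;

    private RfbVersion(int major, int minor) {
        this.major = major;
        this.minor = minor;
    }

    /** Parses a greeting of the form "RFB xxx.yyy\n" (exactly 12 ASCII bytes). */
    static RfbVersion parse(byte[] greeting) {
        String text = new String(greeting, StandardCharsets.US_ASCII);
        if (greeting.length != 12 || !text.matches("RFB \\d{3}\\.\\d{3}\\n")) {
            throw new IllegalArgumentException("Not an RFB ProtocolVersion message");
        }
        int major = Integer.parseInt(text.substring(4, 7));
        int minor = Integer.parseInt(text.substring(8, 11));
        return new RfbVersion(major, minor);
    }

    /** Reads the greeting from a stream, for example the input stream of a TCP socket. */
    static RfbVersion readFrom(InputStream in) throws IOException {
        byte[] greeting = new byte[12];
        new DataInputStream(in).readFully(greeting); // blocks until all 12 bytes arrive
        return parse(greeting);
    }

    public static void main(String[] args) throws IOException {
        // Simulated server greeting; a real client would read it from the connection.
        byte[] wire = "RFB 003.008\n".getBytes(StandardCharsets.US_ASCII);
        RfbVersion version = readFrom(new ByteArrayInputStream(wire));
        System.out.println("Server speaks RFB " + version.major + "." + version.minor);
        // prints "Server speaks RFB 3.8"
    }
}
```

After this greeting the two sides negotiate security and pixel format before the input events and image updates described above begin to flow.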